Carbon Footprint API Integration Guide for Seamless Implementation

Karel Maly

June 19, 2025

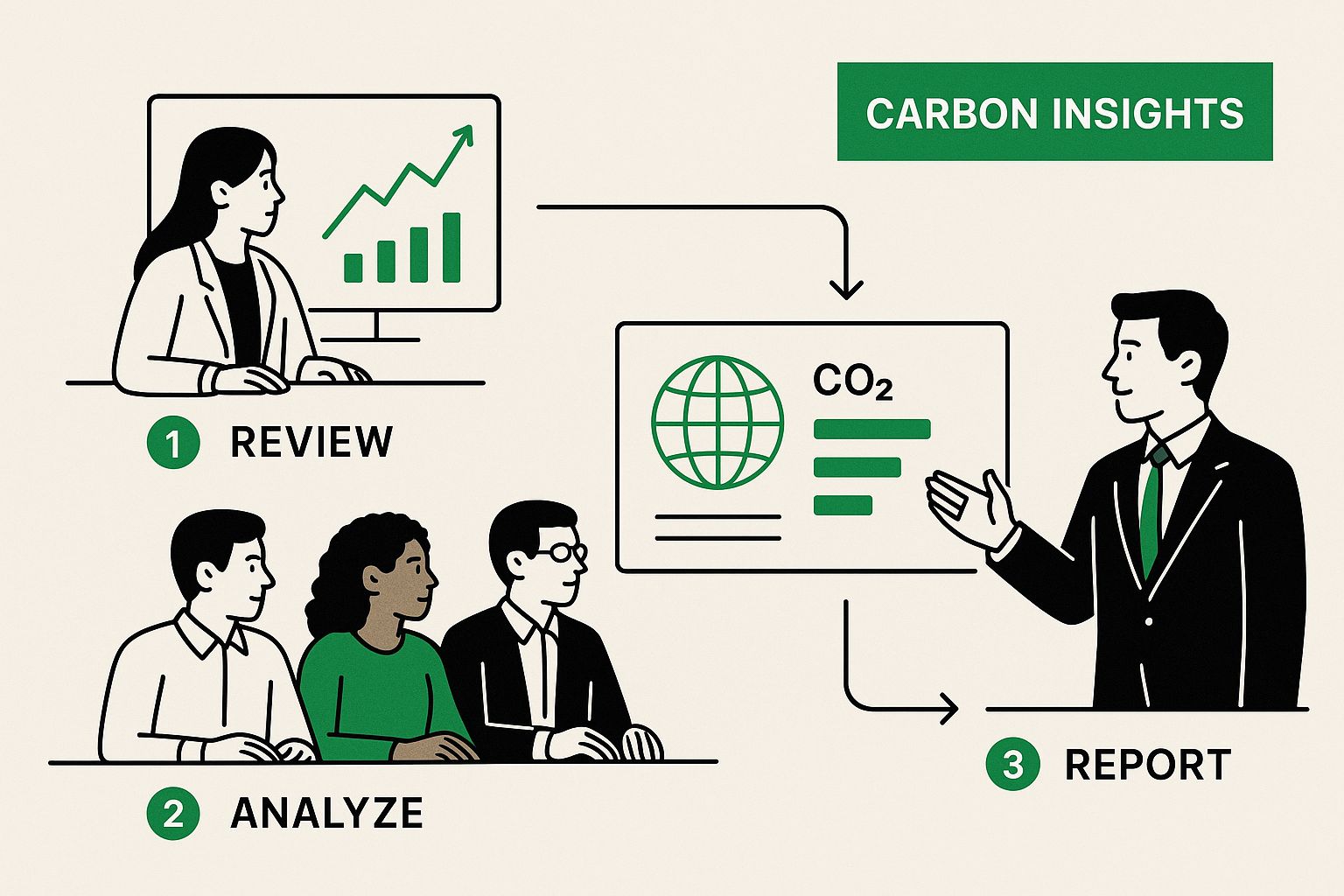

Understanding What Carbon Footprint APIs Actually Do for Your Business

Let's cut through the marketing noise and get to the heart of what a carbon footprint API integration genuinely offers. At its core, an API (Application Programming Interface) is like a dedicated translator between your business software and a powerful carbon accounting engine like Carbonpunk. It’s the tool that turns broad sustainability goals into precise, actionable data points right inside the systems you already use. Instead of manually keying data into a separate calculator, the API automates the whole thing, fetching information from your operations and sending back accurate emissions figures.

This automated data exchange is what separates a simple awareness tool from a proper enterprise solution. For a small business curious about its general impact, a basic online calculator might be enough. But for a logistics company juggling thousands of daily shipments, a robust API is non-negotiable. It provides continuous, real-time monitoring that embeds carbon data directly into your decision-making workflows.

From Vague Goals to Concrete Data

Imagine your logistics platform automatically flagging a specific shipping route as being 15% more carbon-intensive than an alternative. That's not a guess; it's a real-time calculation. The API grabs shipment details—like distance, weight, and transport mode—and instantly processes them using advanced emissions models. This transforms a high-level goal like "reduce shipping emissions" into a practical, operational choice that can be made on the spot. The key is translating raw business activity into a standardised metric: CO₂ equivalent (CO₂e).
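To make the tonne-kilometre logic concrete, here is a minimal Python sketch of an activity-based estimate. Weight in tonnes multiplied by distance gives tonne-kilometres, and that figure is multiplied by an emissions factor for the transport mode. The factor values and mode names are hypothetical placeholders, not Carbonpunk's models. A real API applies its own, far more detailed factors.

```python
# Hypothetical emissions factors in grams CO2e per tonne-kilometre.
# Placeholder values for illustration only; use your provider's factors.
EMISSION_FACTORS_G_PER_TKM = {
    "road_hgv": 80.0,
    "rail": 20.0,
    "sea_container": 10.0,
}


def shipment_co2e_kg(distance_km: float, weight_kg: float, mode: str) -> float:
    """Estimate shipment emissions in kg CO2e as tonne-km x mode factor."""
    if distance_km < 0 or weight_kg < 0:
        raise ValueError("distance and weight must be non-negative")
    factor_g_per_tkm = EMISSION_FACTORS_G_PER_TKM[mode]  # KeyError for unknown modes
    tonne_km = (weight_kg / 1000.0) * distance_km
    return tonne_km * factor_g_per_tkm / 1000.0  # grams -> kilograms


def percent_more_intensive(route_kg: float, alternative_kg: float) -> float:
    """How much more carbon-intensive one route is than another, in percent."""
    return (route_kg - alternative_kg) / alternative_kg * 100.0


road = shipment_co2e_kg(500, 2000, "road_hgv")  # 2 t x 500 km = 1,000 tkm -> 80 kg
rail = shipment_co2e_kg(500, 2000, "rail")      # 1,000 tkm -> 20 kg
print(f"road {road:.1f} kg, rail {rail:.1f} kg, "
      f"road is {percent_more_intensive(road, rail):.0f}% more intensive")
```

With these placeholder factors, the road option comes out 300% more carbon-intensive than rail. That is the kind of comparison a routing system could flag automatically.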

This is especially important in regions with a significant industrial history. For instance, greenhouse gas emissions per capita in the Czech Republic, despite recent progress, are still among the highest in the EU, with a recently recorded average of 9.25 tonnes of CO₂e per person. For Czech businesses, particularly in manufacturing and logistics, a carbon footprint API offers a direct path to granular data and can help accelerate the country's shift away from its historical reliance on coal. You can discover more about the national emissions context and read detailed reports about the Czech Republic's climate performance on the CCPI website.

Here’s a look at a dashboard that visualises the kind of data a carbon footprint API can provide.

This visual breakdown makes it easy to spot high-emission areas at a glance, helping managers prioritise which operations need attention first.

Choosing the Right Integration Approach

Not all carbon APIs are built the same, and picking the right one comes down to your specific business needs. Timing is also key. Integrating a basic API might seem like a quick win, but it could lack the scalability you'll need for future reporting. A more sophisticated API might take more work to set up, but it will deliver the detailed, auditable data required for official ESG reports and compliance.

To help you decide, here's a breakdown of the different types of carbon footprint APIs and what they're best suited for.

Carbon Footprint API Types Comparison

Comparison of different carbon footprint API solutions including features, use cases, and integration complexity

| API Type | Primary Use Case | Data Sources | Integration Complexity | Best For |
|---|---|---|---|---|
| Basic Calculator API | Simple, one-off calculations for general awareness. | User-input data, fixed emissions factors. | Low | Startups, marketing campaigns, or educational tools. |
| Activity-Based API | Tracking specific, recurring business operations. | Logistics data (shipments, routes), energy consumption records. | Medium | Logistics providers, manufacturers, and transport companies. |
| Enterprise Solution API | Comprehensive, end-to-end supply chain monitoring. | ERP systems, IoT sensor data, procurement platforms. | High | Large corporations needing auditable data for compliance and ESG reporting. |

The key takeaway is that an activity-based API often hits the sweet spot for growing businesses, while a full enterprise solution is essential for large-scale, compliance-driven organisations.

Ultimately, a carbon footprint API integration is about creating a system that fosters real environmental improvement, not just checking a box. It provides the data backbone for smarter, more sustainable business decisions. To see how this applies on a personal scale, have a look at our guide on how to use a carbon footprint calculator to reduce your impact.

Getting Authentication Right Without the Headaches

Connecting to a new API often feels like a chore, especially when you're just trying to get a quick proof-of-concept running. Nobody wants to spend their first day wrestling with credentials instead of pulling meaningful data. For most developers tackling a carbon footprint API integration, the initial goal is simple: make one successful call to see what the data looks like. Thankfully, with modern APIs like Carbonpunk, this process is usually quite straightforward. The most common method you'll find is using an API key.

Think of an API key as a simple password for your application. You include it in the header of your requests, and the server uses it to identify and authorise your access. It's a clean and effective way to get started quickly. After signing up for an API service, you'll typically find your key in the developer dashboard. It is critical to treat this key like any other sensitive credential—don't hardcode it directly into your application's source code. A much better practice is to use environment variables to store it securely, which prevents accidental exposure and simplifies managing keys across different environments.
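Here is a minimal sketch of the environment-variable approach in Python, using only the standard library. The variable name `CARBON_API_KEY` and the `Bearer` header scheme are assumptions for illustration. Use whatever header format the provider's documentation specifies.

```python
import os


class MissingApiKeyError(RuntimeError):
    """Raised when the API key environment variable is not set."""


def load_api_key(var_name: str = "CARBON_API_KEY") -> str:
    """Read the key from the environment instead of hardcoding it in source."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise MissingApiKeyError(f"Set the {var_name} environment variable")
    return key


def auth_headers(api_key: str) -> dict[str, str]:
    # Assumption: the provider expects a Bearer token in the Authorization header.
    return {"Authorization": f"Bearer {api_key}", "Accept": "application/json"}
```

Because the key only ever lives in the environment, each environment (development, staging, production) can use its own key without any code changes.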

The Carbonpunk developer documentation provides a clear example of how to handle authentication.

As you can see, the documentation shows exactly where to find your API key and how to structure the authorisation header, which removes much of the guesswork.

Structuring Your First Connection Test

Your first API call shouldn't be a complex, multi-layered request. The main objective is just to confirm that your authentication works. A good starting point is to target a simple, lightweight endpoint, like a `status` or `health check` endpoint, if the API provides one.

Once your basic connection is verified, you can move on to more complex queries. This is also the point where you should start thinking about security best practices that will save you headaches down the road.
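A first connection test might look like the following standard-library sketch. The `/status` path, the base URL and the `Bearer` scheme are hypothetical, so substitute the health-check endpoint and header format from the provider's documentation. The request is built separately from sending it, which makes the header logic easy to check without network access.

```python
import urllib.error
import urllib.request


def build_health_check_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for a (hypothetical) lightweight status endpoint."""
    url = base_url.rstrip("/") + "/status"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
        method="GET",
    )


def check_connection(base_url: str, api_key: str, timeout: float = 10.0) -> bool:
    """Return True if the status endpoint answers 200 with our credentials."""
    request = build_health_check_request(base_url, api_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        # 401 or 403 usually points to a missing, wrong, or under-privileged key.
        print(f"Health check failed with HTTP {err.code}")
        return False
    except urllib.error.URLError as err:
        # DNS failures, refused connections, TLS problems and similar.
        print(f"Could not reach the API: {err.reason}")
        return False
```

For example, `check_connection("https://api.example.com/v1", api_key)` returns `True` only when the endpoint answers with HTTP 200. Any other outcome is reported and returns `False`.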

Visualising these metrics is the ultimate goal, and it all begins with a secure and stable connection to the API.

To help you understand the landscape of API security, here's a comparison of common authentication methods you might encounter.

| Method | Security Level | Implementation Difficulty | Use Case | Example APIs |
|---|---|---|---|---|
| API Key | Medium | Low | Server-to-server communication, internal apps, simple integrations. | Carbonpunk, OpenWeatherMap |
| OAuth 2.0 | High | High | Third-party applications where users grant access to their data. | Google APIs, Salesforce APIs |
| JWT (JSON Web Tokens) | High | Medium | Securing RESTful APIs, single sign-on (SSO), stateless authentication. | Auth0, custom-built APIs |
| Basic Auth | Low | Very Low | Internal, low-risk tools or during early development phases. | Rarely used in modern public APIs. |
| mTLS (Mutual TLS) | Very High | High | High-security financial or B2B integrations requiring client verification. | Banking APIs, secure enterprise systems |

As the table shows, methods like OAuth 2.0 offer robust security for user-facing applications. For server-to-server integrations, however, like the one you'd build with a carbon footprint API, a well-managed API key is often the most practical choice and provides adequate security when it is stored and rotated properly.

Handling Security and Rate Limits Proactively

While API keys are convenient, some enterprise scenarios might demand more advanced authentication, such as OAuth 2.0. This method is more secure for applications where users need to grant permissions without sharing their credentials directly. However, for most server-to-server carbon footprint API integration projects, a properly managed API key is perfectly sufficient and secure.

Two other critical aspects to consider from the outset are credential rotation and rate limiting.

- Credential Rotation: Regularly changing your API keys is a fundamental security practice. It's a good idea to set a recurring reminder to generate a new key and decommission the old one. This limits the window of opportunity for attackers if a key is ever compromised.

- Rate Limiting: Most APIs limit how many requests you can make in a given period. Before you write a single line of production code, check the API documentation for these limits. Then implement retry logic with an exponential backoff strategy. If a request fails (for instance, because you hit the rate limit and receive a `429 Too Many Requests` response), your application waits for a short period before trying again, then doubles the waiting time with each subsequent failure. This prevents you from overwhelming the server and getting your key temporarily blocked.
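Here is a minimal Python sketch of retry with exponential backoff. It assumes your HTTP layer raises a hypothetical `RetryableError` for responses worth retrying (such as 429 or 503) and lets all other errors propagate immediately. The small random jitter is a common addition, not something the text above requires. It stops many clients from retrying in lockstep. The `sleep` parameter is injectable so the logic can be tested without actually waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RetryableError(Exception):
    """Hypothetical error your HTTP layer raises for 429, 503, timeouts, etc."""


def retry_with_backoff(
    func: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying retryable failures with exponentially growing waits."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return func()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
            sleep(delay + random.uniform(0, delay * 0.1))  # up to 10% jitter
    raise AssertionError("unreachable")
```

Capping the delay at `max_delay` keeps a long outage from producing waits of many minutes between attempts.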

Turning Raw Data Into Accurate Carbon Calculations

Once you have authentication handled, you get to the core of the work. This is the stage where a carbon footprint API integration can either truly shine or hit a major roadblock. The real test isn't just about pushing data; it's about pushing the right data in the right structure. The operational data from your business—whether from energy bills, shipping manifests, or procurement software—is almost never ready to go straight out of the box. It tends to be messy, inconsistent, and sometimes has frustrating gaps.

Your job is to transform this raw information into a clean, structured format that the Carbonpunk API can understand and use to generate reliable emissions data. It's a bit like preparing a sophisticated meal. You wouldn't just toss ingredients into a pot and hope for the best. You have to prep everything first: wash the vegetables, measure the spices, and chop everything correctly. Data prep for an API follows the same principle, involving data mapping, unit conversion, and thorough quality validation. If you skip this, you’re setting yourself up for a "garbage in, garbage out" situation, where the carbon figures you get back are inaccurate and, frankly, unhelpful.

Mapping and Normalising Your Data for Consistency

The first practical move is to map your internal data fields to what the API is expecting. For instance, your logistics system might record a shipment's weight under the field `pkg_wgt`, while the API expects `weight_kg`.

Next up is the critical job of data normalisation. Your systems might track distances in miles, weights in pounds, and fuel in gallons. A dependable carbon API, however, will usually want standardised metric units to keep all its calculations consistent. Your code needs to handle these conversions automatically. This is a common stumbling block; forgetting to convert miles to kilometres, for example, will throw off your emissions results completely.
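Here is a minimal sketch of a combined mapping and normalisation step in Python. Only `pkg_wgt` and `weight_kg` come from the example above. The other field names, and the assumption that `pkg_wgt` is recorded in pounds, are hypothetical. The conversion constants are the exact international definitions (1 lb = 0.45359237 kg, 1 mile = 1.609344 km, 1 US gallon = 3.785411784 L).

```python
# Exact conversion factors (international definitions).
LB_TO_KG = 0.45359237
MILE_TO_KM = 1.609344
US_GALLON_TO_L = 3.785411784

# Hypothetical mapping: internal field -> (API field, converter to metric).
FIELD_MAP = {
    "pkg_wgt": ("weight_kg", lambda lbs: lbs * LB_TO_KG),      # assumed to be pounds
    "dist_mi": ("distance_km", lambda mi: mi * MILE_TO_KM),
    "fuel_gal": ("fuel_litres", lambda gal: gal * US_GALLON_TO_L),
    "mode": ("transport_mode", str),
}


def to_api_payload(record: dict) -> dict:
    """Rename known fields and convert units; unknown or empty fields are dropped."""
    payload = {}
    for source_field, (api_field, convert) in FIELD_MAP.items():
        value = record.get(source_field)
        if value is not None:
            payload[api_field] = convert(value)
    return payload


print(to_api_payload({"pkg_wgt": 100, "dist_mi": 10, "mode": "road", "note": "fragile"}))
```

Keeping the mapping in one table-like structure means a new source system only needs new entries, not new conversion code scattered through the integration.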

A conversion that deserves special attention is for electricity consumption, as emissions factors can change a lot depending on the region and even the time of day. The carbon intensity of the Czech grid, for instance, is always in flux. In 2024, the average emissions were 402 grams of CO₂e per kWh, a significant improvement from past years. This number is shaped by the energy mix, where nuclear power generates 41%, coal 34.2%, and renewables contribute about 15%. To get accurate results, your integration needs to use the correct, current emissions factor for the specific location and time of consumption. You can learn more about the evolving energy situation in the Czech Republic and its emissions impact on Nowtricity.
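The electricity calculation itself is simple multiplication. The hard part is choosing the factor. This Python sketch uses the 2024 Czech average cited above (402 g CO₂e/kWh) as a fallback and prefers an hour-specific factor when one is available. The hourly factors in the example are hypothetical. In practice they would come from the API or a grid-data source.

```python
CZ_2024_AVERAGE_G_PER_KWH = 402.0  # annual average cited in the text above


def electricity_co2e_kg(kwh: float, grid_g_per_kwh: float) -> float:
    """Emissions for a consumption figure at a given grid intensity."""
    return kwh * grid_g_per_kwh / 1000.0  # grams -> kilograms


def hourly_co2e_kg(
    readings: list[tuple[int, float]],
    hourly_factors: dict[int, float],
    fallback_g_per_kwh: float = CZ_2024_AVERAGE_G_PER_KWH,
) -> float:
    """Sum emissions for (hour_of_day, kWh) readings, using each hour's factor if known."""
    total = 0.0
    for hour, kwh in readings:
        factor = hourly_factors.get(hour, fallback_g_per_kwh)
        total += electricity_co2e_kg(kwh, factor)
    return total


print(electricity_co2e_kg(1000, CZ_2024_AVERAGE_G_PER_KWH))  # 402.0 kg CO2e
# Hypothetical factors: a low-carbon night hour and a higher-carbon evening peak.
print(hourly_co2e_kg([(3, 100.0), (18, 100.0), (12, 100.0)], {3: 300.0, 18: 500.0}))
```

The same 300 kWh produces different totals depending on when it was consumed. That difference is why a single national average can misstate the footprint of time-shifted loads.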

Handling the Reality of Messy Data

Let's be realistic: no real-world dataset is ever perfect. You're going to run into missing values, typos, and strange outliers. A well-built integration is designed to expect these problems. Instead of letting a single missing `vehicle_type` value derail an entire batch, build in a few safeguards:

- Set sensible defaults: If the transport mode is missing but you know the route is short and within a city, you could default to a 'light commercial vehicle' and flag that record for a human to review later.

- Implement validation rules: Before you even think about sending data, run it through a validation check. This can catch things like text in a number field or an impossibly heavy weight for a small parcel.

- Establish a 'quarantine' process: If a record is too incomplete or fails validation, don't just throw it away. Log it to a separate file or database table for someone to look at manually. This prevents data loss and helps you spot recurring problems in your source systems.
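The three safeguards can be combined into one triage step. This Python sketch assumes records already use the field names `weight_kg`, `distance_km` and `vehicle_type`, plus a hypothetical `urban` flag. The 50 km threshold, the 30,000 kg plausibility limit and the `light_commercial_vehicle` label are illustrative choices, not values from any API. Quarantined records are kept with a reason rather than discarded.

```python
from dataclasses import dataclass, field
from typing import Optional

MAX_WEIGHT_KG = 30_000.0     # hypothetical upper bound for a single shipment
SHORT_URBAN_ROUTE_KM = 50.0  # hypothetical threshold for the default rule


@dataclass
class Triage:
    ready: list = field(default_factory=list)        # safe to send to the API
    flagged: list = field(default_factory=list)      # sent with a default; needs human review
    quarantined: list = field(default_factory=list)  # (record, reason) pairs for manual review


def _is_number(value) -> bool:
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def validation_error(record: dict) -> Optional[str]:
    """Return a reason string if the record fails basic checks, else None."""
    weight = record.get("weight_kg")
    distance = record.get("distance_km")
    if not _is_number(weight) or not 0 < weight <= MAX_WEIGHT_KG:
        return f"implausible weight_kg: {weight!r}"
    if not _is_number(distance) or distance < 0:
        return f"invalid distance_km: {distance!r}"
    return None


def triage(records: list[dict]) -> Triage:
    result = Triage()
    for record in records:
        error = validation_error(record)
        if error:
            result.quarantined.append((record, error))
            continue
        if not record.get("vehicle_type"):
            if record.get("urban") and record["distance_km"] <= SHORT_URBAN_ROUTE_KM:
                record = {**record, "vehicle_type": "light_commercial_vehicle"}
                result.flagged.append(record)
            else:
                result.quarantined.append((record, "missing vehicle_type"))
                continue
        result.ready.append(record)
    return result
```

Storing the quarantine reason alongside each record makes it easy to count recurring problems per source system later.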

This screenshot from the Carbonpunk documentation shows exactly how data should be structured for ingestion, clearly marking the required fields and their formats.

The clean structure shown here really drives home the point: sending well-organised data is essential for getting precise and trustworthy carbon calculations back from the API. Consider this structure your blueprint for a successful integration.

Making API Calls That Actually Work in Production

Once your data is cleaned and structured, you're ready to start talking to the API. This is where the theory of your carbon footprint API integration gets real and has to stand up to the demands of a production environment. Making solid API interactions isn't just about sending a request and getting something back. It's about designing efficient calls, truly understanding the returned data, and handling the inevitable hiccups of real-world use. Let's look at what separates a test call from a genuinely production-ready one.

First, get to know the most useful endpoints, such as `calculate` and `validate`.

Decoding API Responses and Managing Data Structures

When you begin fetching data, you'll find that carbon API responses can be quite detailed, often arriving as nested JSON objects with breakdowns of emissions by scope, source, and activity. Your code must be able to parse this nested structure without failing. A frequent pitfall is writing parsing logic that's too rigid. If the API introduces a new, optional field down the line, an inflexible parser could crash. A better approach is to write your logic to be more forgiving, checking if keys exist before you try to access their values.
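One way to keep parsing forgiving in Python is a small helper that walks nested keys and falls back to a default instead of raising `KeyError`. The response shape here (`total_co2e_kg`, `breakdown.by_scope`) is hypothetical, so map it to the fields the API actually documents.

```python
from typing import Any


def dig(data: Any, *keys: str, default: Any = None) -> Any:
    """Follow nested dict keys, returning `default` if any level is missing."""
    current = data
    for key in keys:
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


def parse_emissions(response: dict) -> dict:
    """Extract the fields we use; unknown extra fields are simply ignored."""
    by_scope = dig(response, "breakdown", "by_scope") or {}
    return {
        "total_kg": float(dig(response, "total_co2e_kg") or 0.0),
        "by_scope": {scope: float(value) for scope, value in by_scope.items()},
    }


sample = {
    "total_co2e_kg": 123.5,
    "breakdown": {"by_scope": {"scope_1": 3.5, "scope_3": 120.0}},
    "methodology_version": "2025.1",  # a field this parser doesn't know about
}
print(parse_emissions(sample))
print(parse_emissions({"total_co2e_kg": 10}))  # breakdown missing -> empty dict
```

A new optional field in the response is ignored, and a missing section yields an empty result instead of a crash.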

For example, with logistics data, the API might return emissions split by transport leg—one for the truck from the warehouse, another for the ocean freight, and a third for the final-mile delivery. Each leg has its own emissions data. Parsing this correctly allows you to see exactly which parts of a journey are the most carbon-intensive. To see how this applies to Czech businesses, take a look at our guide on carbon footprint tracking for logistics.
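Once legs are parsed, ranking them shows where a journey's emissions concentrate. This sketch assumes a hypothetical `legs` array in which each entry has a `name` and a `co2e_kg` value. Legs without a name get a positional label instead of causing an error.

```python
def rank_legs(response: dict) -> list[tuple[str, float, float]]:
    """Return (leg name, kg CO2e, share of total) sorted from highest to lowest."""
    legs = [leg for leg in response.get("legs") or [] if isinstance(leg, dict)]
    rows = [
        (leg.get("name") or f"leg_{i + 1}", float(leg.get("co2e_kg") or 0.0))
        for i, leg in enumerate(legs)
    ]
    total = sum(kg for _, kg in rows)
    ranked = [(name, kg, kg / total if total else 0.0) for name, kg in rows]
    return sorted(ranked, key=lambda row: row[1], reverse=True)


journey = {
    "legs": [
        {"name": "warehouse_truck", "co2e_kg": 120.0},
        {"name": "ocean_freight", "co2e_kg": 310.0},
        {"co2e_kg": 70.0},  # unnamed final-mile leg
    ]
}
for name, kg, share in rank_legs(journey):
    print(f"{name}: {kg:.1f} kg ({share:.0%})")
```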

Here’s a snapshot of what a well-formed API request and its response can look like in a tool like Postman, which is brilliant for testing your calls before writing code.

The clear layout in tools like this helps you see the nested data and fix your parsing logic before it ever becomes a problem in your application.

Optimising for Performance and Reliability

As your application grows, performance becomes a serious consideration. Two vital strategies to focus on here are pagination and caching.

- Smart Pagination: When fetching a large dataset, like a full year of shipment data, you can't request it all in one go; the API will make you retrieve it in "pages." A simple offset-based loop (page 1, page 2, and so on) can slow down on deep pages and can skip or duplicate records if data changes between requests. If the API offers cursor-based pagination, prefer it. Each response returns a pointer to the next page, which stays consistent and efficient even for very large datasets.

- Intelligent Caching: Not all data needs to be fetched fresh every time. For emissions calculations based on fixed historical data (like a shipment that was completed last month), the result will never change. Caching these results locally can significantly lower your API call volume. The goal is to find the right balance: cache static results aggressively, but make sure you're getting current data for recent or ongoing activities.
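Cursor-based pagination can be wrapped in a generator so that calling code simply iterates over records. The `fetch_page` callable is a hypothetical stand-in for your HTTP call. It takes the current cursor (or `None` for the first page) and returns the page's items plus the next cursor, or `None` when there are no more pages. Check the provider's documentation for the actual parameter and field names.

```python
from typing import Callable, Iterator, Optional

# (items on this page, cursor for the next page or None when finished)
Page = tuple[list[dict], Optional[str]]


def iterate_all(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield every record across pages by following the API's next-page cursor."""
    cursor: Optional[str] = None
    while True:
        items, cursor = fetch_page(cursor)
        yield from items
        if not cursor:
            break
```

Because it is a generator, only one page is held in memory at a time, which matters when iterating over a full year of shipments.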

Finally, always plan for things to go wrong. APIs experience temporary outages, and network connections can be flaky. Your integration must be built to handle this. Implement a retry mechanism with exponential backoff to manage temporary failures like timeouts or `503 Service Unavailable` errors.

Building Systems That Handle Problems Gracefully

An integration is only as good as its ability to handle failure. When your carbon footprint API integration runs into a problem, be it a brief network hiccup, a bug in your code, or an outage from the API provider, your system needs to react intelligently. Simply letting it crash or show a generic error isn't really an option, especially when business decisions depend on this data. Building a resilient system means going beyond basic `try-catch` blocks.

This involves thinking about failure scenarios before they happen. What if the API is down for maintenance? What if a specific request is malformed and gets rejected? A truly robust system doesn't just fail; it degrades gracefully. This means it might temporarily switch off the carbon calculation feature while still letting users do other tasks, all while logging the problem and alerting your development team.

Intelligent Retries and Circuit Breakers

One of the most common issues you'll face is temporary failures, like a network timeout or a `502 Bad Gateway` error. These usually resolve themselves, so retrying with exponential backoff is the right first response.

However, if an API is completely down, retrying forever is pointless. This is where a circuit breaker pattern is really useful. It works just like an electrical circuit breaker: after a certain number of failures in a row, the "circuit opens," and your application stops trying to call the API for a set period. It can then periodically try a single call to see if the service has recovered. This stops your system from wasting resources on calls that are bound to fail.
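Here is a minimal circuit breaker in Python. It follows the three states described above: closed (calls pass through), open (calls are refused until a cool-down expires) and half-open (one trial call decides whether to close or reopen). The thresholds are illustrative, and the injectable `clock` exists only to make the behaviour testable. This sketch is not thread-safe. Concurrent callers would need a lock around the state changes.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the API while the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 5,
        reset_timeout: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, func: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("API calls paused after repeated failures")
            # Cool-down has elapsed: half-open, so this call is the trial request.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # A failed trial, or too many consecutive failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

In practice the breaker would wrap the retrying call, so that backoff handles brief blips and the breaker stops traffic during a sustained outage.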

Effective Monitoring and Alerting

You can't fix problems you don't know about. Good monitoring is vital for keeping an integration healthy. This is more than just checking if the API is "up" or "down." You need to keep an eye on key metrics that give you real insights:

- API Latency: Are response times suddenly getting longer? This could be an early warning sign of a performance issue.

- Error Rates: Track the percentage of `4xx` (client-side) and `5xx` (server-side) errors. A spike in `4xx` errors might point to a bug in how you're formatting data, while a surge in `5xx` errors suggests a problem with the API provider.

- Data Quality Issues: Log cases where data doesn't pass validation. Are you suddenly seeing more records with missing fields? This could signal an issue in a system further upstream.
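Here is a small Python sketch of the error-rate metric. It classifies a window of HTTP status codes by class and turns spikes into the hints described above. Where the status codes come from (your logs, a metrics library) and the 5% alert threshold are assumptions to adapt.

```python
from collections import Counter


def error_rates(status_codes: list[int]) -> dict[str, float]:
    """Share of responses in the 4xx (client-side) and 5xx (server-side) classes."""
    total = len(status_codes)
    if total == 0:
        return {"4xx": 0.0, "5xx": 0.0}
    classes = Counter(code // 100 for code in status_codes)
    return {"4xx": classes[4] / total, "5xx": classes[5] / total}


def diagnose(rates: dict[str, float], threshold: float = 0.05) -> list[str]:
    """Turn error rates into hints; the 5% threshold is illustrative."""
    hints = []
    if rates["4xx"] > threshold:
        hints.append("4xx spike: check how requests are formatted and authenticated")
    if rates["5xx"] > threshold:
        hints.append("5xx spike: possible provider-side problem")
    return hints


window = [200] * 8 + [422, 503]  # e.g. the last ten responses
print(error_rates(window), diagnose(error_rates(window)))
```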

This monitoring dashboard from Carbonpunk gives you a clear, at-a-glance view of your integration’s health.

The dashboard clearly shows API call volume and error rates over time, making it easy to spot unusual patterns or spikes that need looking into.

This kind of proactive monitoring is especially important given how emissions data can change. For instance, the Czech Republic saw a significant drop in its carbon dioxide emissions, with a 9.09% reduction to 88,835 kilotonnes in 2020 alone. These trends reflect major policy and energy shifts. An integration that fails silently could miss these dynamic changes, leading to out-of-date or incorrect carbon accounting. You can discover insights into the Czech Republic’s CO₂ emissions on Macrotrends to learn more about these trends. Ultimately, a system designed for graceful failure makes sure your carbon tracking stays reliable, even when the technology behind it hits some turbulence.

Scaling Your Integration Without Breaking the Bank

Your first carbon footprint API integration might feel straightforward, perhaps tracking a few dozen shipments a day. But what happens when your business thrives and that number swells? A system that handles 100 API calls daily with ease can buckle under the pressure of 100,000. Scaling isn't just about managing more traffic; it’s about doing it smartly, preventing your API costs and server load from spiralling out of control. The key is to be proactive and optimise before you run into performance issues.

This is where tried-and-true strategies like intelligent caching and request batching make a real difference. Instead of firing off a separate API call for every new piece of data, why not group them? Most modern APIs, including Carbonpunk, are built for this. Sending a single batch request with 100 shipment records is far more efficient than making 100 individual requests. This approach seriously cuts down on network overhead and is gentler on both your system and the API's servers.
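Here is a minimal batching helper in Python. The batch size of 100 mirrors the example above. The real maximum batch size and the batch endpoint are provider-specific, so check the documentation. On Python 3.12+, `itertools.batched` does the same job (yielding tuples instead of lists).

```python
from itertools import islice
from typing import Iterable, Iterator


def batched_records(records: Iterable[dict], size: int = 100) -> Iterator[list[dict]]:
    """Group records into lists of at most `size` for one batch request each."""
    if size < 1:
        raise ValueError("batch size must be at least 1")
    iterator = iter(records)
    while batch := list(islice(iterator, size)):
        yield batch
```

For example, 250 shipment records become three requests of 100, 100 and 50 records instead of 250 individual calls.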

Optimising Performance for Large Datasets

As your dataset grows into the thousands or even millions of records, how you store and retrieve that information becomes critical. Just dumping everything into one huge table is a surefire way to create performance bottlenecks. A much better approach is to implement effective data storage and indexing strategies. For instance, if you often search for emissions data by date range, transport mode, or region, ensure those columns are indexed in your database. This simple tweak can reduce query times from minutes to mere seconds.
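To illustrate the indexing advice, here is a sketch using Python's built-in `sqlite3` module. The table and column names are hypothetical, and the same idea applies to PostgreSQL, MySQL or any other relational database. `EXPLAIN QUERY PLAN` lets you confirm that a date-range query actually uses the index instead of scanning the whole table.

```python
import sqlite3


def create_emissions_table(conn: sqlite3.Connection) -> None:
    conn.execute(
        """CREATE TABLE IF NOT EXISTS emissions (
               id INTEGER PRIMARY KEY,
               shipped_on TEXT NOT NULL,      -- ISO date, e.g. '2025-06-19'
               transport_mode TEXT NOT NULL,
               region TEXT NOT NULL,
               kg_co2e REAL NOT NULL
           )"""
    )
    # Index the columns you filter on most often.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_emissions_shipped_on ON emissions (shipped_on)")
    conn.execute("CREATE INDEX IF NOT EXISTS idx_emissions_mode ON emissions (transport_mode)")
    conn.execute("CREATE INDEX IF NOT EXISTS idx_emissions_region ON emissions (region)")


def explain(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's query plan as one string (the 'detail' column of each row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
create_emissions_table(conn)
range_query = "SELECT SUM(kg_co2e) FROM emissions WHERE shipped_on BETWEEN ? AND ?"
print(explain(conn, range_query, ("2025-01-01", "2025-03-31")))
```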

Another powerful technique is to create pre-aggregated summary tables. Instead of calculating total monthly emissions by scanning every single record each time, a background process can compute these totals daily and store them in a separate table. Your dashboards and reports then pull from this much smaller summary table, making them incredibly fast and responsive.
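Here is a sketch of the pre-aggregation idea, again with `sqlite3` and hypothetical table names. A background job rebuilds a small monthly summary table in one transaction, and dashboards read from it instead of scanning every shipment. Rebuilding the whole table is the simplest strategy. At larger volumes you would likely refresh only the months that changed.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS emissions (
    id INTEGER PRIMARY KEY,
    shipped_on TEXT NOT NULL,           -- ISO date, e.g. '2025-06-19'
    transport_mode TEXT NOT NULL,
    kg_co2e REAL NOT NULL
);
CREATE TABLE IF NOT EXISTS monthly_emissions (
    month TEXT NOT NULL,                -- 'YYYY-MM'
    transport_mode TEXT NOT NULL,
    total_kg_co2e REAL NOT NULL,
    shipment_count INTEGER NOT NULL,
    PRIMARY KEY (month, transport_mode)
);
"""


def refresh_monthly_summary(conn: sqlite3.Connection) -> None:
    """Recompute monthly totals; intended to run from a scheduled background job."""
    with conn:  # the DELETE and INSERT commit together as one transaction
        conn.execute("DELETE FROM monthly_emissions")
        conn.execute(
            """INSERT INTO monthly_emissions
                   (month, transport_mode, total_kg_co2e, shipment_count)
               SELECT substr(shipped_on, 1, 7), transport_mode, SUM(kg_co2e), COUNT(*)
               FROM emissions
               GROUP BY substr(shipped_on, 1, 7), transport_mode"""
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO emissions (shipped_on, transport_mode, kg_co2e) VALUES (?, ?, ?)",
    [("2025-05-03", "road", 100.0), ("2025-05-20", "road", 50.0), ("2025-06-01", "rail", 20.0)],
)
refresh_monthly_summary(conn)
print(conn.execute("SELECT * FROM monthly_emissions ORDER BY month").fetchall())
```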

Here’s a glimpse of a performance dashboard built with a tool like Grafana, which is excellent for keeping an eye on these kinds of metrics.

Visualising metrics such as query latency helps you catch performance degradation as your data volume increases, allowing you to step in before it affects your users.

Balancing Real-Time Needs with Cost Efficiency

One of the biggest hurdles in scaling is finding the right balance between the demand for real-time data and the need for performance and cost management. Does every single carbon calculation really need to be updated instantly? Likely not. For historical analysis or high-level reporting, data that's a few hours old is often more than adequate. This is where smart data refresh strategies become invaluable.

You could implement a multi-tiered caching system:

- Hot Cache: For data related to live activities, like a shipment currently in transit, you might refresh the data every few minutes.

- Warm Cache: For data from recently completed activities, refreshing every few hours or once a day could be perfectly sufficient.

- Cold Storage: For archival data from last quarter or last year, the calculations are static. You compute them once and store them permanently.
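The three tiers can be expressed as cache lifetimes. In this Python sketch the status values, the 5-minute and 6-hour lifetimes, and the 30-day warm window are all hypothetical. Cold entries never expire. The cache is in-memory for brevity, whereas cold results would normally be persisted, for example in the database.

```python
import time
from typing import Any, Callable, Optional

HOT_TTL_S = 5 * 60          # hypothetical: shipments still in transit
WARM_TTL_S = 6 * 60 * 60    # hypothetical: recently completed shipments
WARM_WINDOW_DAYS = 30       # hypothetical cut-off between warm and cold


def ttl_for(status: str, completed_days_ago: Optional[float]) -> Optional[float]:
    """Pick a cache lifetime in seconds; None means 'never expires' (cold tier)."""
    if status == "in_transit":
        return HOT_TTL_S
    if completed_days_ago is not None and completed_days_ago <= WARM_WINDOW_DAYS:
        return WARM_TTL_S
    return None


class TieredCache:
    """In-memory cache with per-entry lifetimes; persist cold entries in real use."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._entries: dict[str, tuple[Any, Optional[float]]] = {}  # key -> (value, expires_at)
        self._clock = clock

    def get_or_fetch(self, key: str, ttl: Optional[float], fetch: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None:
            value, expires_at = entry
            if expires_at is None or now < expires_at:
                return value  # still fresh: no API call
        value = fetch()  # e.g. a call to the emissions API
        self._entries[key] = (value, None if ttl is None else now + ttl)
        return value
```

Typical use is `cache.get_or_fetch(shipment_id, ttl_for(status, days_since_completion), fetch)`. Each shipment is then refreshed only as often as its tier requires.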

This tiered method ensures your system stays responsive for critical, real-time scenarios while significantly reducing redundant API calls for data that seldom changes. For businesses managing large and complex supply chains, choosing the right tools is crucial. You can see how different solutions support these scaling requirements in our breakdown of the top business carbon footprint tool for enterprises. A well-designed, scalable carbon footprint API integration not only delivers accurate data but does so in a way that is sustainable for your infrastructure and your budget.

Key Takeaways for Carbon Footprint API Integration Success

Getting started with a carbon footprint API integration can seem like a big project, but a successful outcome really comes down to a few core ideas. Pulled from our own experiences in the trenches, these pointers offer a clear path to make sure your setup not only works but provides real value right from the start.

Start with Data Quality, Not Code

From what we've seen, the most common point of failure isn’t a broken API connection; it’s the quality of the data you're sending over. Before you even think about writing integration logic, spend your time understanding, cleaning, and mapping your source data. Your project's success is built on this foundation.

- Create a Data Dictionary: This sounds formal, but it's a lifesaver. Explicitly map your internal field names (like `ship_wt_lbs`) to the API's required fields (`weight_kg`). This prevents guesswork and errors down the line.

- Normalise Units: You'll want to build reliable functions to convert all your measurements before they ever hit the API. Think pounds to kilograms, miles to kilometres, or gallons to litres. This simple step prevents a whole class of silent calculation mistakes.

- Establish Validation Rules: Your code should act as a gatekeeper. It needs to check for strange values, like negative weights or wrongly formatted data, before making an API call.

Design for Failure and Resilience

Let's be realistic: no system is up 100% of the time. A strong integration is one that expects problems and can handle them smoothly without needing someone to step in manually. This is how you build trust in both the system and the data it produces.

- Implement Exponential Backoff: If an API call fails because of a temporary blip like rate limiting or a network error, don’t just hammer it with retries. A better approach is to wait a few seconds, then double the waiting period for each following failure.

- Use a Circuit Breaker: When an endpoint keeps failing, your application should be smart enough to temporarily stop calling it. This saves resources and prevents a cascade of errors. After a cool-down period, the breaker lets a single trial request through. If it succeeds, the "circuit" closes and requests flow normally again.

Optimise for Scale from the Beginning

An integration that handles 100 transactions just fine can easily fall over when it hits 10,000. If you think about performance early on, you'll save yourself a massive headache and a lot of refactoring work later.

- Batch Your Requests: Whenever possible, avoid sending one API request for every single record. Grouping records into batches is far more efficient, cutting down on network chatter and improving throughput.

- Cache Smartly: Pinpoint which calculations won't change. For instance, the emissions for a shipment that was completed last month are fixed. Caching these results aggressively will lower your API costs and make your own application feel much faster.

Putting these practical strategies into practice will help turn your carbon footprint API integration from a technical chore into a genuinely valuable business tool. By focusing on data quality, building for resilience, and planning for scale, you're setting yourself up to deliver accurate, reliable, and actionable environmental insights.

Ready to transform your emissions data from a liability into an asset? Discover how Carbonpunk can automate your carbon accounting with over 95% accuracy and provide actionable insights for your supply chain.